
Floating Point Applications in a Rackmount Computer: GPGPU vs CPU

by Will Shirley on Oct 30, 2017 8:22:37 AM

GPGPU Compute Scales Excellently When Properly Deployed

GPGPU (General Purpose Graphics Processing Unit) computing has been around for more than a decade now, and many people in and out of the computing industry know that adding a GPU to a system can accelerate computing tasks such as simulations and factorization.

I’ve been evangelizing the benefits of GPGPU compute acceleration to customers for years, but I’ve never come up with a concrete way to illustrate how linearly performance scales when you add GPUs to a compatible workflow.

Until now.

Folding@Home

Several weeks ago, I rediscovered a project that originally piqued my interest in high performance computing, Folding@Home.

One of the original distributed computing projects alongside storied stalwarts SETI@Home and GIMPS, “F@H” allows users to donate their spare computer processing power to simulate protein folding, or to test out new drug designs. It is thought that protein misfolding is a significant contributing factor in many diseases, including Alzheimer’s and many forms of cancer.

Folding@Home is significant in that it helped pioneer GPGPU accelerated scientific computing, deploying the first GPU-enabled client in 2006, and pushing the boundaries of distributed computing in a variety of ways.

Since then, GPU clients of the project have grown to be the most powerful contributors to the project, owing to the highly-parallelizable nature of the Markov State Models that F@H uses to simulate protein folding.

Part of the reason, in my opinion, that Folding@Home has remained so popular since its release in 2000 is that the project tracks and rewards contributions through a points system, and makes that data freely available for third parties to track and graph.

In addition, the client can join “Teams” that can collectively compete against each other. For the purposes of this experiment, we’ll be using the data collated by the long-running Extreme Overclocking tracker under the moniker TrentonSystems.

What are we testing?

| TEST RIG | | |
| --- | --- | --- |
| Chassis | THS4086, 4U HDEC Series computer | 16.25” depth, front access hot-swap fans |
| Host Board | HEP8225 host board | Dual Xeon E5-2680 v4 (Broadwell), 28 cores/56 threads |
| Backplane | HDB8228 backplane | 8 slots, 4 @ Gen3 x16, 4 @ Gen3 x4 |
| Power Supply | 860 W ATX | Higher wattages available |
| OS | Windows Server 2016 Standard | GUI is installed |
| Storage | Emphase 25GB Industrial SSD | SATA/600 |
| GPUs | GeForce GTX 970 | Zotac brand, 4GB GDDR5, stock clocks |

This platform is ideal because it can accommodate 4 double-wide GPUs without PCIe switches, owing to the 80 lanes of PCIe passed to the backplane through the HDEC architecture. With no switch in the path, performance should, in principle, scale linearly as GPUs are added to the mix.
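As a quick sanity check on the lane budget, here is a minimal sketch in Python. The slot counts and the 80-lane figure come from the test rig table and the paragraph above; the variable names are mine and purely illustrative.

```python
# Lane budget check for the backplane described above.
# Slot layout is taken from the test rig table: 4 slots at Gen3 x16, 4 at Gen3 x4.
backplane_slots = {16: 4, 4: 4}  # {lanes per slot: number of slots}

# 80 lanes reach the backplane according to the post. Two Xeon E5-2680 v4
# processors at 40 PCIe 3.0 lanes each (per Intel's published spec) give the
# same total.
lanes_available = 2 * 40

lanes_required = sum(width * count for width, count in backplane_slots.items())

print(f"Lanes required by all slots: {lanes_required}")
print(f"Lanes available:             {lanes_available}")
print(f"Switchless operation possible: {lanes_required <= lanes_available}")
```

If every slot can be fed directly from CPU lanes, no GPU has to share upstream bandwidth through a switch, which is the property this test relies on.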

Methodology

A fresh copy of Windows Server 2016 was installed, along with only the drivers necessary to bring the system up, CoreTemp to monitor processor temperatures, MSI’s Afterburner program for GPU monitoring, and the Folding@Home client.

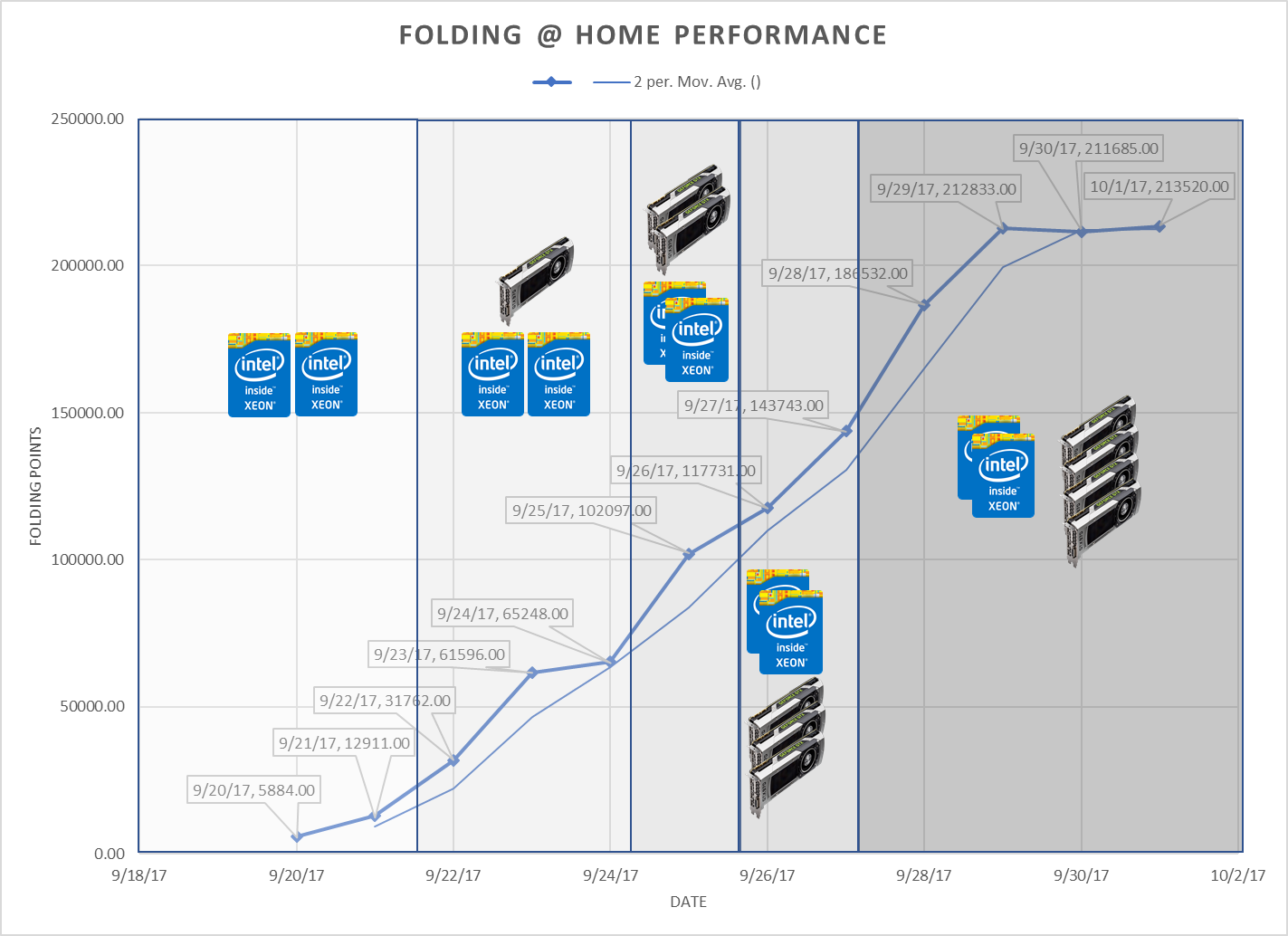

Testing methodology was to obtain a baseline score with processors only for 24 hours. Once that was obtained, I shut down the system and added an additional GPU as close to every 24 hours as was feasible.

Note: The reasoning behind the 24-hour test cycle is that not all Work Units the project is currently computing are created equal. Some are far more difficult and require 6 or more hours of continuous compute time, while others may take as little as an hour on a GPU, and the F@H stats are only tabulated every 3 hours. A 24-hour window should provide a large enough sample to be confident in the data even with these variations.

The system was started at 1600 on September 20, 2017 for the processor only run.

Then, as close to every 24 hours as possible, the worker threads were paused, the system was shut down, and an additional graphics card was added.

| Date | Daily Production | Notes |
| --- | --- | --- |
| 9/20/17 | 5884.00 | ~8 hours of CPU only time |
| 9/21/17 | 12911.00 | ~16 hours of CPU only time, added GPU at 1600 |
| 9/22/17 | 31762.00 | |
| 9/23/17 | 61596.00 | |
| 9/24/17 | 65248.00 | Downtime & corrupt workunits, had to reset data buffer due to GPU config error. |
| 9/25/17 | 102097.00 | Added GPU 2 in the afternoon |
| 9/26/17 | 117731.00 | Added GPU 3 in the afternoon |
| 9/27/17 | 143743.00 | Added GPU 4 in the afternoon |
| 9/28/17 | 186532.00 | |
| 9/29/17 | 212833.00 | |
| 9/30/17 | 211685.00 | |
| 10/1/17 | 213520.00 | |

Figure 2, Annotated Chart

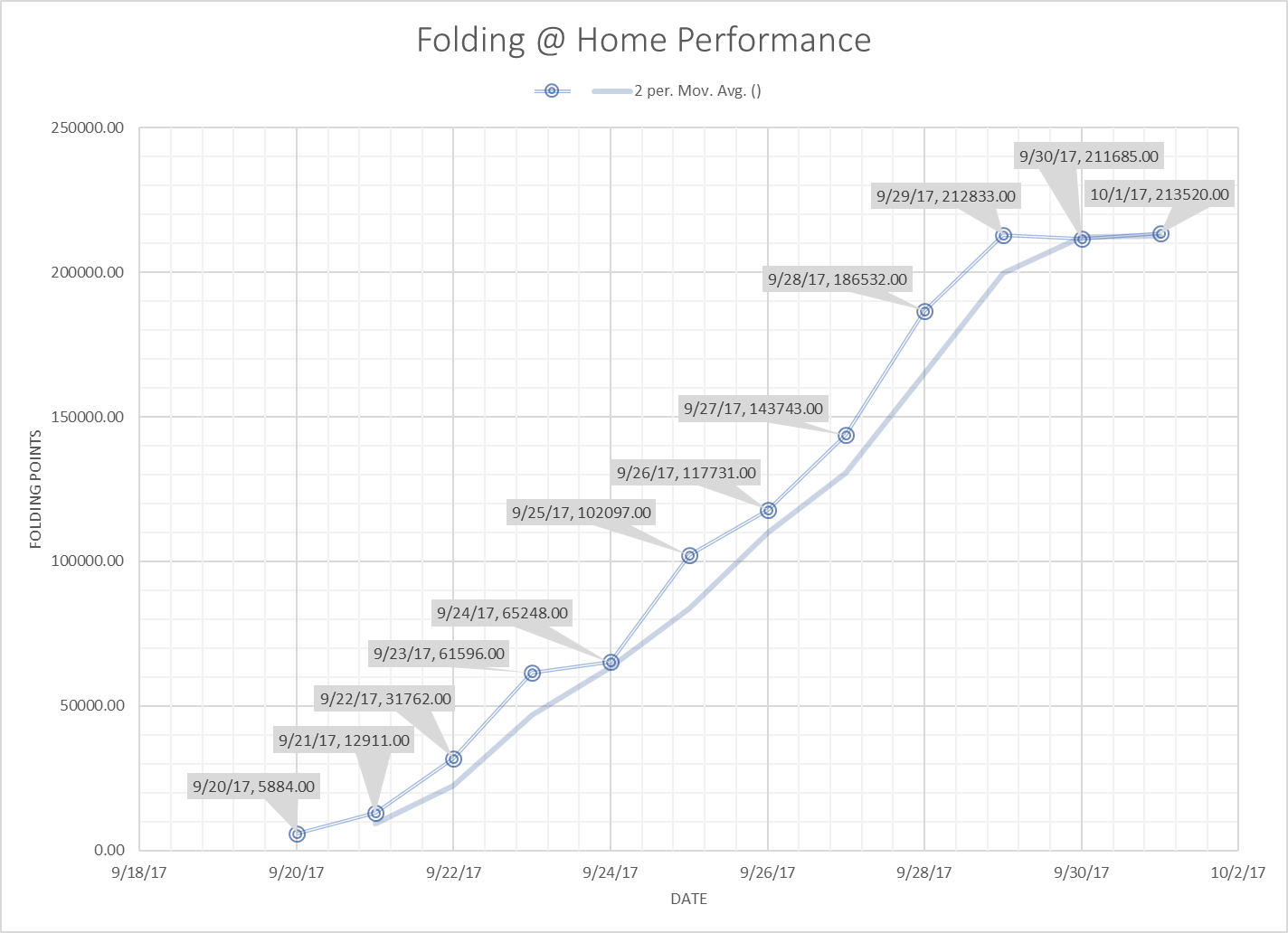

Figure 3, Unannotated Chart

Analysis and Conclusions

The addition of just one GPU increased overall system performance immensely, as the period between 9/22 and 9/24 illustrates, demonstrating just how much better a GPU-powered system is at crunching large problems.

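In fact, if you add up the totals for 9/20 and 9/21 (18,795 points) and compare them to the 2-day total for the 1-GPU configuration on 9/22 and 9/23 (93,358 points), the result is nearly a 400% performance increase. Additionally, going from the CPU-only baseline to 4 GPUs, which produced roughly 212,000 points per day from 9/29 onward, is an astounding 1000% increase in computing power.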
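The arithmetic can be reproduced from the daily production table. This is a minimal sketch in Python, and the grouping of days is my assumption: 9/20 plus 9/21 is treated as the CPU-only baseline, 9/22 plus 9/23 as the 1-GPU period, and the average of 9/29 through 10/1 as a typical 4-GPU day. The helper `percent_increase` is illustrative and not part of any F@H tooling.

```python
# Daily production figures copied from the table in this post.
daily_points = {
    "9/20/17": 5884,
    "9/21/17": 12911,
    "9/22/17": 31762,
    "9/23/17": 61596,
    "9/29/17": 212833,
    "9/30/17": 211685,
    "10/1/17": 213520,
}


def percent_increase(baseline, value):
    """Return the increase from baseline to value as a percentage."""
    return (value - baseline) / baseline * 100.0


# Assumed grouping: the two partial CPU-only days form the baseline.
cpu_only_total = daily_points["9/20/17"] + daily_points["9/21/17"]

# Assumed grouping: the first two days after the first GPU was added.
one_gpu_total = daily_points["9/22/17"] + daily_points["9/23/17"]

# Assumed grouping: average of the three full days with all 4 GPUs installed.
four_gpu_days = ["9/29/17", "9/30/17", "10/1/17"]
four_gpu_daily_avg = sum(daily_points[d] for d in four_gpu_days) / len(four_gpu_days)

cpu_to_one_gpu = percent_increase(cpu_only_total, one_gpu_total)
cpu_to_four_gpu = percent_increase(cpu_only_total, four_gpu_daily_avg)

print(f"CPU-only total (9/20 + 9/21): {cpu_only_total}")
print(f"1-GPU total (9/22 + 9/23):    {one_gpu_total}")
print(f"Increase, CPU-only to 1 GPU:  {cpu_to_one_gpu:.0f}%")
print(f"Increase, CPU-only to average 4-GPU day: {cpu_to_four_gpu:.0f}%")
```

Keep in mind that Work Units vary in difficulty and points are only tabulated every 3 hours, so these figures are best read as rough indicators, not precise benchmarks.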

With that kind of potential increase available, every high-performance computing or simulation system designer should be, at least, considering how they can leverage GPGPUs to accelerate their application.

Trenton Systems is an NVIDIA Preferred Solution partner, so we have the expertise and factory backing to help you deploy a GPGPU-accelerated compute solution into your application.

Interested in learning more? Get in touch to discover how Team Trenton can arm you with the right tools to take on any mission with speed, efficiency, and maximum protection.
