
Giant List of Intel’s Advanced Technologies

by Brett Daniel on Jul 23, 2020 4:11:55 PM

Photo: Intel processors utilize a multitude of advanced technologies that we'll cover in this blog post.

Intel's processors are equipped with a multitude of technologies that can improve the overall performance of your rugged server or workstation.

Intel technologies provide your server with a variety of performance perks, from faster data encryption, to increased hardware-based security, to additional protection from viruses and malware, and much, much more.

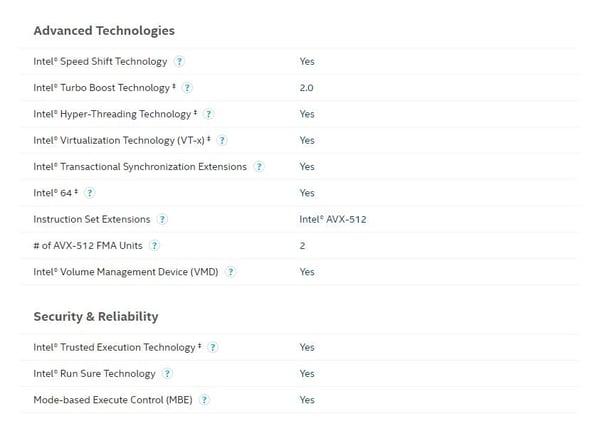

Screenshot: If you've browsed Intel's Product Specifications library, you've likely seen a long list of advanced technologies like the one above. If you've ever wondered what each of them does, you've come to the right place: we explain each one in this blog post.

You've likely seen most, if not all, of these Intel technologies while viewing a specific processor listed in Intel's Product Specifications library, but just what do these advanced technologies do, exactly?

This blog post will provide an overview of Intel’s advanced technologies, including those found exclusively in Xeon processors, such as Intel's Deep Learning Boost and Volume Management Device.

Graphic: Because Trenton Systems is a member of the Intel IoT Solutions Alliance, it has unique access to Intel’s Xeon processors, and in turn, the advanced technologies that support them.

Every day at our Lawrenceville, Georgia USA facility, we design, manufacture, assemble and stress-test rugged servers and workstations equipped with Xeon motherboards that utilize Intel’s advanced technologies.

Keep reading to gain more insight into how these technologies can benefit your program or application.

Table of Contents

- Intel Deep Learning Boost

- Intel Resource Director Technology

- Intel Speed Shift Technology

- Intel Turbo Boost Technology

- Intel vPro Platform

- Intel Hyper-Threading Technology

- Intel Virtualization Technology (VT-x)

- Intel Virtualization Technology for Directed I/O (VT-d)

- Intel VT-x with Extended Page Tables (EPT)

- Intel Transactional Synchronization Extensions (TSX)

- Intel 64

- Instruction Set Extensions

- # of AVX-512 FMA Units

- Enhanced Intel SpeedStep Technology

- Intel Volume Management Device (VMD)

- Intel AES New Instructions

- Intel Trusted Execution Technology (TXT)

- Execute Disable Bit

- Intel Run Sure Technology (RST)

- Mode-Based Execution Control (MBE)

- Conclusion

What is Intel Deep Learning Boost?

Intel’s Deep Learning Boost (DL Boost) technology enhances the performance of Second Generation Xeon Scalable processors powering artificial intelligence applications.

DL Boost is a relatively new set of embedded processor technologies. By extending the company’s AVX-512 instruction set with Vector Neural Network Instructions (VNNI), DL Boost accelerates AI inference, the stage at which a trained model is applied to new data to produce predictions or other new information.

The actual “boost” comes from performing the core multiply-accumulate step of INT8 convolutions with a single instruction (VPDPBUSD) instead of the three separate AVX-512 instructions (VPMADDUBSW, VPMADDWD and VPADDD) that previous-generation processors needed. According to Intel, fusing these three instructions into one has three main benefits:

- Maximization of compute resources

- Better utilization of the cache

- Avoidance of potential bandwidth bottlenecks
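To make the instruction fusion concrete, here is a minimal scalar sketch of what happens in one 32-bit lane. It assumes the documented semantics of VPMADDUBSW (unsigned-by-signed byte products, adjacent pairs summed and saturated to 16 bits), VPMADDWD applied against a vector of ones, and VPADDD, compared with the single VNNI instruction VPDPBUSD. The function names are our own, and this models the arithmetic only; it is not SIMD code.

```python
def sat16(x: int) -> int:
    """Clamp to the signed 16-bit range, as VPMADDUBSW does."""
    return max(-32768, min(32767, x))


def wrap32(x: int) -> int:
    """Wrap to a signed 32-bit integer (non-saturating add)."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x >= (1 << 31) else x


def legacy_three_step(acc: int, a: list[int], b: list[int]) -> int:
    """One int32 lane computed with three AVX-512 instructions."""
    # Step 1 (VPMADDUBSW): u8*s8 products, adjacent pairs summed, saturated to int16
    pairs = [sat16(a[i] * b[i] + a[i + 1] * b[i + 1]) for i in (0, 2)]
    # Step 2 (VPMADDWD with a vector of ones): add the two int16 values into one int32
    dword = pairs[0] * 1 + pairs[1] * 1
    # Step 3 (VPADDD): add into the int32 accumulator
    return wrap32(acc + dword)


def vnni_one_step(acc: int, a: list[int], b: list[int]) -> int:
    """The same lane computed by a single VPDPBUSD instruction."""
    # Four u8*s8 products summed straight into the int32 accumulator
    return wrap32(acc + sum(x * y for x, y in zip(a, b)))


a_u8 = [1, 2, 3, 4]   # unsigned 8-bit activations
b_s8 = [5, -6, 7, 8]  # signed 8-bit weights
print(legacy_three_step(10, a_u8, b_s8), vnni_one_step(10, a_u8, b_s8))  # 56 56
```

One side effect is visible in the model: VPDPBUSD has no 16-bit intermediate, so it avoids the saturation the three-step sequence can hit. With `a = [255, 255, 0, 0]` and `b = [127, 127, 0, 0]`, the three-step path yields 32767 while the fused path yields 64770.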

Intel says that DL Boost is ideal for image recognition, object detection, recommendation systems, speech recognition, language translation and numerous other AI-centered applications.

What is Intel Resource Director Technology?

Intel’s Resource Director Technology (RDT) is a framework for resource allocation that consists of five individual technologies:

- Cache Allocation Technology (CAT)

- Code and Data Prioritization (CDP)

- Memory Bandwidth Allocation (MBA)

- Cache Monitoring Technology (CMT)

- Memory Bandwidth Monitoring (MBM)

Cache Allocation Technology

Cache Allocation Technology (CAT) allows operating systems and virtual machine managers (VMMs) to control data placement in the last-level cache (LLC or L3), thereby controlling the amount of cache space that can be consumed by a given thread, app, virtual machine (VM) or container.

CAT benefits large cloud data centers and other multi-core environments by letting critical applications take priority over background processes, which might otherwise consume an excessive share of the LLC and, in turn, drain performance from those critical applications.
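On Linux, CAT is typically exposed through the resctrl filesystem, where each resource group's `schemata` file holds one capacity bitmask (CBM) per L3 cache domain, in the form `L3:0=f0;1=ff`. Intel's implementation requires the set bits of a CBM to be contiguous. The sketch below only builds and validates such a line; the 11-bit mask width, the way counts and the domain IDs are hypothetical, and actually writing the line to `/sys/fs/resctrl/<group>/schemata` is left out.

```python
def is_contiguous(mask: int) -> bool:
    """True if the set bits of a nonzero mask form one contiguous run."""
    if mask == 0:
        return False
    # Shift out trailing zeros; a contiguous run then looks like 0b0111...1
    mask >>= (mask & -mask).bit_length() - 1
    return mask & (mask + 1) == 0


def way_mask(first_way: int, num_ways: int) -> int:
    """Bitmask covering num_ways cache ways starting at first_way."""
    return ((1 << num_ways) - 1) << first_way


def l3_schemata(masks: dict[int, int], cbm_width: int) -> str:
    """Build a resctrl-style 'L3:' line from {cache_domain_id: mask}."""
    full = (1 << cbm_width) - 1
    parts = []
    for domain, mask in sorted(masks.items()):
        if not is_contiguous(mask) or mask & ~full:
            raise ValueError(f"invalid CBM {mask:#x} for domain {domain}")
        parts.append(f"{domain}={mask:x}")
    return "L3:" + ";".join(parts)


# Reserve 4 of 11 ways for a latency-critical group on both sockets (hypothetical)
critical = way_mask(7, 4)
print(l3_schemata({0: critical, 1: critical}, cbm_width=11))  # L3:0=780;1=780
```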

Code and Data Prioritization

Code and Data Prioritization (CDP) is an extension of CAT and also involves the LLC. According to Intel, CDP enables software control over code and data placement in the LLC by enabling separate masks for code and data.

“Key usage models include protecting the code of certain applications on the L3 cache, including those with large code footprints and large data footprints, which may otherwise contend for LLC space. Additionally, certain latency-sensitive applications such as communications apps may benefit as code is more likely to be in the L3 cache when needed, rather than needing to be fetched from memory.”

- Khang T Nguyen, Intel, Code and Data Prioritization: Introduction and Usage Models in the Intel Xeon Processor E5 v4 Family
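When CDP is enabled on Linux (resctrl mounted with the `cdp` option), each group's single `L3` schemata line is replaced by separate `L3CODE` and `L3DATA` lines. The minimal sketch below gives an application's code a few dedicated ways and its data the rest. The 11-way width and the split are hypothetical, and keeping the two masks non-overlapping is our design choice so data cannot evict code; CDP itself also permits overlapping masks.

```python
def cdp_schemata(domain: int, code_ways: int, cbm_width: int) -> list[str]:
    """Split one cache domain into a code mask (low ways) and a data mask (high ways)."""
    if not 0 < code_ways < cbm_width:
        raise ValueError("code_ways must leave at least one way for data")
    code_mask = (1 << code_ways) - 1                 # lowest code_ways bits
    data_mask = ((1 << cbm_width) - 1) & ~code_mask  # every remaining way
    return [f"L3CODE:{domain}={code_mask:x}", f"L3DATA:{domain}={data_mask:x}"]


for line in cdp_schemata(domain=0, code_ways=3, cbm_width=11):
    print(line)
# L3CODE:0=7
# L3DATA:0=7f8
```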

Memory Bandwidth Allocation

Intel introduced Memory Bandwidth Allocation (MBA), an extension of CAT’s shared resource infrastructure, with its Xeon Scalable processor family. Essentially, MBA lets operating systems and VMMs manage and distribute memory bandwidth: software uses it to control how much bandwidth is available to specific workloads, which reduces the likelihood of interference from other consolidated applications.

Intel positions MBA as a way to manage memory bandwidth contention under heavy system load in both data center and enterprise environments, citing the consolidation of multiple virtual machines onto a single server as one example.

“In other cases, the performance or responsiveness of certain applications might depend on having a given amount of memory bandwidth available to perform at an acceptable level, and the presence of other consolidated applications may lead to performance interference effects.”

- Andrew J Herdrich, Marcel David Cornu, Khawar Munir Abbasi, Intel, Introduction to Memory Bandwidth Allocation
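Under Linux resctrl, MBA settings are written as an `MB:` schemata line holding a percentage throttle level per cache domain, for example `MB:0=50;1=50`. These are approximate throttle levels, not guaranteed bandwidth. The sketch below builds such a line. The granularity and minimum values stand in for what resctrl reports in `info/MB/bandwidth_gran` and `info/MB/min_bandwidth` (10 is only an example), and rounding up to the next step is our assumption about how requests map onto hardware steps.

```python
def mb_schemata(requests: dict[int, int], gran: int = 10, min_bw: int = 10) -> str:
    """Build a resctrl-style 'MB:' line from {cache_domain_id: percent}."""
    parts = []
    for domain, pct in sorted(requests.items()):
        if not min_bw <= pct <= 100:
            raise ValueError(f"{pct}% outside [{min_bw}, 100] for domain {domain}")
        # Integer ceiling to the next multiple of gran (assumed rounding behavior)
        step = -(-pct // gran) * gran
        parts.append(f"{domain}={min(step, 100)}")
    return "MB:" + ";".join(parts)


# Cap a batch-analytics group at roughly a third of each socket's bandwidth
print(mb_schemata({0: 33, 1: 33}))  # MB:0=40;1=40
```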

Cache Monitoring Technology

Intel introduced Cache Monitoring Technology (CMT) in 2014 with its Xeon E5-2600 v3 product family. CMT allows operating systems and VMMs to measure how much of the last-level (L3) cache each application, VM or container is occupying.

CMT provides four mechanisms that help software manage shared resources, such as cache space, in heavy-workload environments:

- To detect if the platform supports this monitoring capability (via CPUID)

- For an OS or VMM to indicate a software-defined ID for each of the applications or VMs that are scheduled to run on a core. This ID is called the Resource Monitoring ID (RMID)

- To monitor cache occupancy on a per RMID basis

- For an OS or VMM to read LLC occupancy for a given RMID at any time
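The four mechanisms map fairly directly onto how Linux exposes CMT. The minimal sketch below checks the CPUID feature bit that, per our reading of the Intel SDM, advertises RDT monitoring (CPUID.(EAX=07H, ECX=0):EBX bit 12), then reads per-domain `llc_occupancy` values from a resctrl group. On Linux the kernel assigns each resctrl group its own RMID. The register value is passed in as an integer because Python cannot execute CPUID directly, and the group path in the usage comment is hypothetical.

```python
from pathlib import Path

RDT_M_BIT = 12  # CPUID.(EAX=07H, ECX=0):EBX bit 12 -> RDT monitoring supported


def supports_rdt_monitoring(leaf7_ebx: int) -> bool:
    """Mechanism 1: detect monitoring support from the CPUID feature bit."""
    return bool((leaf7_ebx >> RDT_M_BIT) & 1)


def llc_occupancy(group: Path) -> dict[str, int]:
    """Mechanism 4: read LLC occupancy (bytes) per L3 domain for one resctrl group.

    Mechanisms 2 and 3 (RMID assignment and per-RMID tracking) are handled
    by the kernel and the hardware behind these files.
    """
    return {
        d.name: int((d / "llc_occupancy").read_text())
        for d in sorted((group / "mon_data").glob("mon_L3_*"))
    }


# Usage on a live system (group name is hypothetical):
# print(llc_occupancy(Path("/sys/fs/resctrl/mon_groups/vm1")))
```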

Memory Bandwidth Monitoring

Intel introduced Memory Bandwidth Monitoring (MBM) with its Xeon E5 v4 processor family. It extends CMT with new event codes that track memory bandwidth per RMID, and therefore per thread, application or VM.

Essentially, CMT detects noisy neighbors in the cache and helps address over-utilization of that shared resource, while MBM covers applications with low cache sensitivity, whose behavior shows up in memory traffic rather than cache occupancy, by monitoring memory bandwidth on a per-thread basis.

“CMT is effective at detecting noisy-neighbors in the cache, understanding the cache sensitivity of applications, and debugging performance problems. To fully understand application behavior, however, monitoring of memory bandwidth is also needed, as some apps have low cache sensitivity due to either very small working sets (compute-bound) or extremely large working sets that do not fit well in the cache (streaming applications).”

- Khang T Nguyen, Intel, Introduction to Memory Bandwidth Monitoring in the Intel Xeon Processor E5 v4 Family
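MBM counters on Linux resctrl (`mbm_total_bytes` and `mbm_local_bytes` under each group's `mon_data/mon_L3_XX` directory) are cumulative byte counts, so bandwidth is the difference between two samples divided by the elapsed time. A minimal sketch of that calculation; the group path and the 1-second default interval are illustrative assumptions.

```python
import time
from pathlib import Path
from typing import Callable


def bandwidth_mb_s(bytes_start: int, bytes_end: int, seconds: float) -> float:
    """Average bandwidth between two samples of a cumulative byte counter, in MB/s."""
    if seconds <= 0:
        raise ValueError("interval must be positive")
    return (bytes_end - bytes_start) / seconds / 1e6


def sample(read_counter: Callable[[], int], interval_s: float = 1.0) -> float:
    """Read the counter twice, interval_s apart, and return the average MB/s."""
    b0, t0 = read_counter(), time.monotonic()
    time.sleep(interval_s)
    b1, t1 = read_counter(), time.monotonic()
    return bandwidth_mb_s(b0, b1, t1 - t0)


def mbm_total_reader(group: Path, domain: str = "mon_L3_00") -> Callable[[], int]:
    """Reader for one resctrl group's mbm_total_bytes counter on one L3 domain."""
    path = group / "mon_data" / domain / "mbm_total_bytes"
    return lambda: int(path.read_text())


# Usage on a live system (group name is hypothetical):
# print(sample(mbm_total_reader(Path("/sys/fs/resctrl/mon_groups/vm1"))))
```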

What is Intel Speed Shift Technology?

“Intel Speed Shift Technology (SST) uses hardware-controlled P-states to deliver dramatically quicker responsiveness with single-threaded, transient (short-duration) workloads, such as web browsing, by allowing the processor to more quickly select its best operating frequency and voltage for optimal performance and power efficiency.”

- Intel

According to Microsoft, P-states scale the frequency and voltage at which the processor runs to reduce CPU power consumption. The operating system typically controls this P-state request process, and in turn, the CPU changes its frequency and voltage based on the required workload.

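Intel’s Speed Shift Technology, however, shifts P-state selection from the operating system to the CPU itself. The operating system still supplies hints, such as minimum and maximum performance levels and an energy-performance preference, but the processor picks the operating point, which lets it respond more quickly to short bursts of activity. Intel says this yields more efficient power use and better responsiveness in everyday tasks, such as browsing the web and editing documents.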
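The OS-to-hardware hints described above live in a model-specific register. Per our reading of the Intel SDM, IA32_HWP_REQUEST (MSR 0x774) packs them as shown in the decoder below; the raw value would come from a tool such as msr-tools' `rdmsr`, and the example value is made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class HwpRequest:
    min_perf: int         # bits 7:0   lowest performance level the OS will accept
    max_perf: int         # bits 15:8  highest performance level the OS allows
    desired_perf: int     # bits 23:16 0 lets the hardware choose autonomously
    epp: int              # bits 31:24 energy-performance preference (0 = performance, 255 = energy saving)
    activity_window: int  # bits 41:32


def decode_hwp_request(value: int) -> HwpRequest:
    """Split a raw IA32_HWP_REQUEST value into its fields."""
    return HwpRequest(
        min_perf=value & 0xFF,
        max_perf=(value >> 8) & 0xFF,
        desired_perf=(value >> 16) & 0xFF,
        epp=(value >> 24) & 0xFF,
        activity_window=(value >> 32) & 0x3FF,
    )


print(decode_hwp_request(0x80002A08))
# HwpRequest(min_perf=8, max_perf=42, desired_perf=0, epp=128, activity_window=0)
```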

What is Intel Turbo Boost Technology?

Intel Turbo Boost Technology (TBT) is perhaps the best-known advanced Intel CPU technology. Turbo Boost allows the CPU to run above its base frequency when conditions allow.

When reviewing the specifications of a particular processor, such as the Intel Xeon Silver 4210, you’ll often see a Max Turbo Frequency listed. This is the highest clock speed the processor can reach under Turbo Boost. In the case of the Silver 4210, the base frequency is 2.20 GHz, and the Max Turbo Frequency is 3.20 GHz.
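A quick way to read the two figures is as headroom: the percentage by which the peak turbo clock exceeds the base clock. The sketch below uses a hypothetical 2.0/3.0 GHz processor. The runtime estimate is a best case that assumes a purely clock-bound, single-threaded task held at Max Turbo Frequency throughout; memory stalls and the conditions listed below make real gains smaller.

```python
def turbo_headroom_pct(base_ghz: float, max_turbo_ghz: float) -> float:
    """How much higher the peak turbo clock is than the base clock, in percent."""
    return (max_turbo_ghz / base_ghz - 1) * 100


def best_case_runtime_s(runtime_at_base_s: float, base_ghz: float, max_turbo_ghz: float) -> float:
    """Runtime of a purely clock-bound task if it ran at max turbo the whole time."""
    return runtime_at_base_s * base_ghz / max_turbo_ghz


# Hypothetical processor: 2.0 GHz base, 3.0 GHz Max Turbo Frequency
print(f"{turbo_headroom_pct(2.0, 3.0):.0f}%")  # 50%
print(best_case_runtime_s(3.0, 2.0, 3.0))      # 2.0
```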

The main benefit of Intel TBT is improved performance for both single- and multi-threaded applications. Whether the processor can enter turbo mode, and how high its frequency climbs, depends on several factors, including:

- Type of workload

- Number of active cores

- Estimated current consumption

- Estimated power consumption

- Processor temperature

What is the Intel vPro Platform?

The famous “Built for Business” technology, Intel’s vPro Platform is a set of hardware and security features designed to improve and streamline the performance of business computers and servers.

Intel refers to a processor’s support for vPro as Intel vPro Platform Eligibility, and processors that support the technology are often called simply “Intel vPro processors.” A comprehensive list of Intel processors built on the Intel vPro Platform is available on Intel’s website.

Intel created vPro to deliver benefits across four major areas of computing in business environments: performance, security, manageability and stability.

These features include, but are not limited to, Intel Optane Memory, Intel Hardware Shield, Trusted Platform Module (TPM) 2.0 and Intel Active Management Technology (AMT).

One of the main benefits is remote manageability through AMT. Even when devices are powered off or their operating systems are unresponsive, the vPro Platform allows information technology professionals to access them remotely for monitoring and repairs.

Another major benefit is vPro’s inclusion of TPM 2.0, which is typically a requirement for rugged servers and workstations deployed by the United States military.

For a list of vPro technologies and to learn more about how each technology can benefit your system, read Intel’s A Foundation of Business Computing or visit its vPro Platform product page.

What is Intel Hyper-Threading Technology?

Intel’s Hyper-Threading Technology (HTT) incorporates two processing threads per physical CPU core, which increases throughput and results in improved performance for multi-threaded applications.

Intel describes Hyper-Threading Technology as like “going from a one-lane highway to a two-lane highway” in terms of processor throughput.

In short, HTT lets each core work on two threads at once by sharing its execution resources between them, which improves throughput for multi-threaded applications and multitasking while helping keep overall system performance consistent.

Intel lists three main benefits of HTT:

- Run demanding applications simultaneously while maintaining system responsiveness

- Keep systems protected, efficient and manageable while minimizing impact on productivity

- Provide headroom for future business growth and new solution capabilities
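On Linux you can see HTT at work by comparing logical CPUs with physical cores in `/proc/cpuinfo`: two logical processors that report the same `physical id` (socket) and `core id` are Hyper-Threading siblings on one core. A minimal sketch, using a trimmed, made-up sample of the file rather than a real machine's output.

```python
def count_cores(cpuinfo: str) -> tuple[int, int]:
    """Return (logical CPUs, physical cores) from /proc/cpuinfo-style text."""
    logical = 0
    cores = set()
    physical_id = None
    for line in cpuinfo.splitlines():
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            # Logical CPUs sharing (socket, core) are Hyper-Threading siblings
            cores.add((physical_id, value))
    return logical, len(cores)


SAMPLE = """\
processor\t: 0
physical id\t: 0
core id\t\t: 0

processor\t: 1
physical id\t: 0
core id\t\t: 1

processor\t: 2
physical id\t: 0
core id\t\t: 0

processor\t: 3
physical id\t: 0
core id\t\t: 1
"""
print(count_cores(SAMPLE))  # (4, 2): two threads per core
```

On a live system, `count_cores(open("/proc/cpuinfo").read())` applies the same logic to the real file.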

What is Intel Virtualization Technology (VT-x)?

Intel Virtualization Technology (VT-x) is a hardware enhancement for the IA-32 and Intel 64 architectures that adds processor support for virtualization, allowing a virtual machine monitor to partition a CPU’s resources among several virtual machines or operating systems running on the same server.

For a refresher on virtualization, check out IBM’s virtualization overview.

Thanks to Intel VT-x, a hardware platform can function as several operating systems or virtual machines concurrently, with multiple applications running on each.

According to an Intel white paper on VT-x, the technology “helps consolidate multiple environments into a single server, workstation or PC,” with the primary benefit being that organizations need “fewer systems to complete the same tasks.”

Intel had a few goals in mind with the release of VT-x: reduce VMM complexity; enhance reliability, security and protection; improve functionality; and increase performance.

The end-user benefits of Intel VT-x include:

- Lower support and maintenance cost

- Lower total cost of ownership (TCO)

- Lower platform, energy, cooling, maintenance and inventory costs

- Increased functionality

- Mixed and varied operating systems

- More powerful virtualization solutions
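Before a hypervisor can use VT-x, it has to confirm that the processor implements it and that firmware has not switched it off. Per our reading of the Intel SDM, support is advertised in CPUID leaf 1, ECX bit 5, and enablement is governed by the IA32_FEATURE_CONTROL MSR (0x3A): bit 0 locks the register, and bit 2 allows VMX outside SMX. The sketch takes both registers as integers, since Python cannot execute CPUID or RDMSR itself.

```python
VMX_BIT = 5                   # CPUID.1:ECX bit 5 -> processor implements VT-x (VMX)
FC_LOCK = 1 << 0              # IA32_FEATURE_CONTROL bit 0: register locked by firmware
FC_VMX_OUTSIDE_SMX = 1 << 2   # bit 2: VMX permitted in normal (non-SMX) operation


def vtx_status(cpuid1_ecx: int, feature_control: int) -> str:
    """Classify VT-x availability from CPUID and IA32_FEATURE_CONTROL values."""
    if not (cpuid1_ecx >> VMX_BIT) & 1:
        return "not supported by this processor"
    if feature_control & FC_LOCK and not feature_control & FC_VMX_OUTSIDE_SMX:
        # Locked with VMX off: only a firmware (BIOS/UEFI) setting change helps
        return "supported but disabled in firmware"
    # Either enabled, or unlocked so the OS can enable it itself
    return "available"


print(vtx_status(1 << 5, 0b101))  # available
print(vtx_status(1 << 5, 0b001))  # supported but disabled in firmware
```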

A deep dive on the myriad benefits of Intel VT-x can be found in Intel’s The Advantages of Using Virtualization Technology in the Enterprise.

What is Intel Virtualization Technology for Directed I/O (VT-d)?

You can think of Intel’s Virtualization Technology for Directed I/O (VT-d) as an extension of Intel VT.

Intel VT-d adds support for input/output device virtualization, thereby improving the performance of I/O devices in virtualized environments.

“Intel VT-d can help VMMs improve reliability and security by isolating these devices to protected domains. By controlling access of devices to specific memory ranges, end-to-end (VM to device) isolation can be achieved by the VMM. This helps improve security, reliability and availability.”

- Intel, Intel Virtualization Technology for Directed I/O (VT-d): Enhancing Intel Platforms for Efficient Virtualization of I/O Devices
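The isolation described in the quote can be pictured as a lookup the hardware performs on every DMA request: which domain does this device belong to, and has the VMM mapped this address for that domain? The toy model below captures only that idea. Real VT-d uses root and context tables plus multi-level I/O page tables, none of which are modeled, and the device IDs and addresses are hypothetical.

```python
PAGE = 4096


class DmaRemapper:
    """Toy model of VT-d DMA remapping: device -> domain -> permitted pages."""

    def __init__(self) -> None:
        self.device_domain: dict[str, str] = {}            # "bus:dev.fn" -> domain
        self.domain_pages: dict[str, dict[int, int]] = {}  # domain -> {io page: host page}

    def assign(self, device: str, domain: str) -> None:
        self.device_domain[device] = domain
        self.domain_pages.setdefault(domain, {})

    def map(self, domain: str, io_addr: int, host_addr: int) -> None:
        self.domain_pages[domain][io_addr // PAGE] = host_addr // PAGE

    def translate(self, device: str, io_addr: int) -> int:
        """Translate a device DMA address, faulting on anything the VMM did not map."""
        domain = self.device_domain.get(device)
        if domain is None:
            raise PermissionError(f"DMA from unassigned device {device}")
        host_page = self.domain_pages[domain].get(io_addr // PAGE)
        if host_page is None:
            raise PermissionError(f"DMA fault: {device} -> {io_addr:#x}")
        return host_page * PAGE + io_addr % PAGE


iommu = DmaRemapper()
iommu.assign("0000:03:00.0", "vm1")                 # e.g. a NIC passed through to vm1
iommu.map("vm1", io_addr=0x1000, host_addr=0x7F000)
print(hex(iommu.translate("0000:03:00.0", 0x1010)))  # 0x7f010
```

Any DMA outside the mapped pages, or from a device assigned to no domain, raises an error instead of reaching memory, which is the end-to-end isolation the quote refers to.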

What is Intel VT-x with Extended Page Tables (EPT)?

Extended Page Tables (EPT) is Intel’s version of Second Level Address Translation (SLAT) for its VT-x advanced technology.

According to Intel, by virtualizing the memory management unit (MMU) in hardware, EPT “provides acceleration for memory-intensive virtualized applications.”

Virtualization comes with additional overhead, particularly for memory address translation, and that’s exactly what Intel hoped to help mitigate with EPT.

A study conducted by VMware, a cloud infrastructure and digital workspace technology company, concluded that EPT-enabled systems can improve performance compared to using shadow paging for MMU virtualization.

“EPT provides performance gains of up to 48 percent for MMU-intensive benchmarks and up to 600 percent for MMU-intensive microbenchmarks.”

- Excerpt from VMware study
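With EPT, every guest memory access goes through two translations: the guest's own page tables map guest virtual to guest physical addresses, and the VMM's EPT maps guest physical to host physical addresses in hardware. Shadow paging instead has the VMM keep merged tables in sync in software, which is where its overhead comes from. The toy model below flattens both stages to single dictionaries, which is a simplification. It also computes the standard worst-case cost of a TLB miss under nested paging, which explains why EPT gains depend on workload.

```python
PAGE = 4096


def translate(gva: int, guest_pt: dict[int, int], ept: dict[int, int]) -> int:
    """Toy two-stage translation: guest virtual -> guest physical -> host physical."""
    gpa_page = guest_pt[gva // PAGE]  # stage 1: guest page tables (guest OS)
    hpa_page = ept[gpa_page]          # stage 2: EPT (VMM-owned, walked by hardware)
    return hpa_page * PAGE + gva % PAGE


def nested_walk_refs(guest_levels: int = 4, ept_levels: int = 4) -> int:
    """Worst-case memory references for one TLB miss with nested paging.

    Each guest page-table entry must be read (guest_levels reads), and each of
    those entries' addresses, plus the final guest physical address, needs a
    full EPT walk: n*(m+1) + m = (n+1)*(m+1) - 1.
    """
    return (guest_levels + 1) * (ept_levels + 1) - 1


print(hex(translate(0x1234, guest_pt={1: 5}, ept={5: 9})))  # 0x9234
print(nested_walk_refs())                                 # 24 (vs. 4 without virtualization)
```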

What are Intel Transactional Synchronization Extensions?

Intel Transactional Synchronization Extensions (TSX) is an addition to Intel’s x86 instruction set architecture. It adds support for hardware transactional memory to improve the performance of multi-threaded applications. The technology was introduced with Intel’s Fourth Generation Core Processors and is utilized by Xeon processors as well.

According to a paper published by Intel Labs and the Intel Architecture Development Group, TSX allows processors to determine dynamically whether threads actually need to serialize (that is, execute one at a time) through lock-protected critical sections.

The paper, written by Richard Yoo, Christopher Hughes, Konrad Lai and Ravi Rajwar, evaluated the initial implementation of Intel TSX on a set of high-performance computing workloads. The researchers observed an average speedup of 1.41x on those workloads and a 1.31x average bandwidth improvement when TSX was applied to a parallel user-level TCP/IP stack.

Looking for more information on Intel TSX? Check out the Intel TSX Overview published by Intel’s Developer Zone.

What is Intel 64?

Intel 64 is the company’s 64-bit computing upgrade to its 32-bit x86 instruction set architecture. According to PCMag, Xeon processors were the first CPUs to incorporate Intel 64.

Intel 64 stands in contrast to the IA-32 architecture, which powers 32-bit operating systems, but it’s helpful to think of Intel 64 as an enhancement of IA-32, rather than its own separate architecture.

According to Intel, 64-bit computing improves performance by allowing servers and workstations to address more than 4GB of virtual and physical memory, the limit of a 32-bit address space. In turn, the processor can work with more data in memory at once, which improves system performance and reduces latency.

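According to Digital Trends, a 64-bit processor can access over four billion times the memory of a 32-bit processor: a 64-bit address space is 2^32 (about 4.3 billion) times larger than a 32-bit one, although real processors implement fewer physical address bits than the full 64. Another advantage of 64-bit processors is that they can run both 32-bit and 64-bit applications, whereas 32-bit processors can only run the former.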
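The numbers above are plain powers of two, which a few lines make explicit. The 46-bit physical address width in the last line is only an illustrative assumption; the width actually implemented varies by processor.

```python
GIB = 2**30
TIB = 2**40


def address_space_bytes(bits: int) -> int:
    """Number of distinct byte addresses for a given address width."""
    return 2**bits


print(address_space_bytes(32) // GIB)                      # 4 (GiB)
print(address_space_bytes(64) // address_space_bytes(32))  # 4294967296 (~4.3 billion times larger)
print(address_space_bytes(46) // TIB)                      # 64 (TiB) for a hypothetical 46-bit width
```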

Overall, a 64-bit Xeon processor improves multitasking and supports larger data sets in memory. How much memory a system can actually address depends on the specific processor and platform, and the benefits of 64-bit computing only materialize when the processor is paired with a 64-bit operating system; otherwise, they essentially go unnoticed.

What are Instruction Set Extensions?

Intel’s instruction set extensions are a collection of additional instructions, such as Intel SSE4.2 and Intel AVX, used to boost the performance of a CPU.

Intel lists six instruction set extensions on its website:

- Streaming SIMD Extensions (Intel SSE)

- Streaming SIMD Extensions 2 (Intel SSE2)

- Streaming SIMD Extensions 3 (Intel SSE3)

- Streaming SIMD Extensions 4 (Intel SSE4)

- Advanced Vector Extensions (Intel AVX)

- Advanced Vector Extensions 512 (Intel AVX-512)

You can learn more about the purpose of each instruction set extension in Intel’s Instruction Set Extensions Technology support article.
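On Linux, the `flags` line of `/proc/cpuinfo` shows which of these extensions a processor reports. The names do not always match Intel's marketing names: SSE3 appears as `pni` (Prescott New Instructions), SSE4 is split into `sse4_1` and `sse4_2`, and AVX-512 is a family of subsets, of which `avx512f` is the foundation. A minimal sketch with a made-up sample line.

```python
# Linux /proc/cpuinfo flag names for the six extensions listed above
EXTENSION_FLAGS = {
    "SSE": ["sse"],
    "SSE2": ["sse2"],
    "SSE3": ["pni"],
    "SSE4": ["sse4_1", "sse4_2"],
    "AVX": ["avx"],
    "AVX-512": ["avx512f"],
}


def supported_extensions(cpuinfo: str) -> dict[str, bool]:
    """Map each extension to whether all its flags appear on the first 'flags' line."""
    for line in cpuinfo.splitlines():
        if line.startswith("flags"):
            flags = set(line.partition(":")[2].split())
            break
    else:
        flags = set()
    return {name: all(f in flags for f in needed) for name, needed in EXTENSION_FLAGS.items()}


sample = "flags\t\t: fpu sse sse2 pni sse4_1 sse4_2 avx avx2\n"
print(supported_extensions(sample))
# {'SSE': True, 'SSE2': True, 'SSE3': True, 'SSE4': True, 'AVX': True, 'AVX-512': False}
```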

What is the # of AVX-512 FMA Units?

Intel says its AVX-512 instruction set extension can handle the most demanding computational tasks because of the set’s 512-bit vectors. These resource-intensive tasks, according to Intel, include tasks associated with scientific simulations, financial analytics, AI and deep learning, cryptography and much more.

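Processors that support AVX-512 have either one or two 512-bit Fused Multiply-Add (FMA) units per core, and the “# of AVX-512 FMA Units” specification tells you which. Each unit is 512 bits wide, double the width of the 256-bit units used for AVX2, and a second unit doubles the number of FMA operations a core can issue per cycle. An FMA computes a × b + c as a single operation with a single rounding step, which increases both computational speed and accuracy.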
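Both halves of that claim can be checked with a little arithmetic. The sketch below emulates a fused multiply-add in Python by computing a × b + c exactly with `fractions.Fraction` and rounding once, then compares it with a separate multiply and add. It also computes theoretical peak double-precision FLOPs per core per cycle for one versus two units, counting an FMA as two FLOPs and assuming an FMA issues every cycle, which real code rarely sustains.

```python
from fractions import Fraction


def unfused_mul_add(a: float, b: float, c: float) -> float:
    """Separate multiply and add: the product is rounded to double first."""
    return a * b + c


def fused_mul_add(a: float, b: float, c: float) -> float:
    """Emulated FMA: compute a*b + c exactly, then round once to double."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))


def peak_dp_flops_per_cycle(fma_units: int, vector_bits: int = 512) -> int:
    """64-bit lanes per vector x 2 FLOPs (multiply + add) x number of FMA units."""
    return (vector_bits // 64) * 2 * fma_units


a, b, c = 1 + 2**-30, 1 - 2**-30, -1.0
print(unfused_mul_add(a, b, c))                                 # 0.0: the 2**-60 term was rounded away
print(fused_mul_add(a, b, c) == -(2**-60))                      # True: exact result survives
print(peak_dp_flops_per_cycle(1), peak_dp_flops_per_cycle(2))  # 16 32
```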

See Intel’s AVX-512 product page for more information on FMAs and how AVX-512 can accelerate workloads.

What is Enhanced Intel SpeedStep Technology?

Enhanced Intel SpeedStep Technology (EIST) is centered around the management of power consumption and heat production.

In a nutshell, EIST serves two goals at once: delivering high performance, originally to mobile computing systems, while also saving power. It does so by automatically adjusting CPU voltage and core frequency, changing the two in separate steps, and through clock partitioning and recovery.

Basically, EIST lowers the CPU’s clock speed during periods of light system activity and raises it during heavy activity, saving power and reducing heat production when the server or workstation is mostly running background applications.
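Adjusting voltage along with frequency is what makes the savings large. Under the usual first-order model of CMOS switching power, dynamic power scales with capacitance × voltage² × frequency, so a modest drop in both compounds. The sketch uses illustrative 80% ratios, assumes capacitance stays constant, and ignores static (leakage) power; it is not an Intel power model.

```python
def relative_dynamic_power(voltage_ratio: float, freq_ratio: float) -> float:
    """First-order CMOS model: dynamic power is proportional to C * V**2 * f.

    Ratios are relative to the nominal operating point; capacitance is held
    constant and leakage (static) power is ignored.
    """
    return voltage_ratio**2 * freq_ratio


# A lower P-state at 80% of nominal voltage and 80% of nominal frequency (illustrative)
print(round(relative_dynamic_power(0.8, 0.8), 3))  # 0.512, i.e. roughly half the dynamic power
```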

What is the Intel Volume Management Device?

Intel’s Volume Management Device (VMD) is all about hot-swappable storage and storage management. The technology allows PCIe-based NVMe SSDs to be removed and serviced without powering down the server or workstation.

The main goal of Intel VMD is to improve uptime and serviceability for servers and workstations.

Shutting down an entire server system just to service or replace one or more NVMe SSDs can cause significant downtime, which can have dire consequences for mission-critical systems and operations. Intel VMD helps to prevent these consequences right from a Xeon processor.

But what’s the point of having hot-swap capability if you don’t know which NVMe SSD to remove? Well, thankfully, Intel VMD actually supports the activation of a status LED on NVMe SSDs, which helps data center administrators identify the SSD in need of servicing.

What are Intel AES New Instructions?

Intel AES New Instructions (AES-NI) is essentially a data encryption acceleration technology. It adds seven instructions that accelerate the widely used Advanced Encryption Standard (AES) algorithm, providing faster encryption and decryption and, because the work is done in hardware, improved security.

Intel cites two primary benefits of AES-NI:

- Provides significant speedup of the AES algorithm

- Provides additional security via hardware-based encryption and decryption without the need for software lookup tables

For more on AES-NI and AES, read Intel’s AES-NI development article.

What is Intel Trusted Execution Technology?

Intel Trusted Execution Technology (TXT) is a security technology designed to address software attacks at the hardware level.

According to an Intel TXT white paper, TXT is “specifically designed to harden platforms from the emerging threats of hypervisor attacks, BIOS or other firmware attacks, malicious root kit installations or other software-based attacks.” It complements other protective measures, such as anti-virus software.

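Intel TXT protects systems through a measured launch and boot process isolation. Before the Measured Launch Environment (MLE), such as a hypervisor or operating system kernel, takes control, its code is measured (hashed) and the result is recorded in the TPM, so it can be compared against known-good values.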
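The recording step relies on the TPM's extend operation: a Platform Configuration Register (PCR) can never be written directly, only updated as PCR_new = hash(PCR_old || measurement). The final value therefore depends on every component and on their order. The minimal sketch below models that folding with a SHA-256 PCR bank starting at all zeros. The component names are hypothetical, and the actual TXT launch sequence (GETSEC[SENTER], the SINIT ACM, specific PCR indices) is not modeled.

```python
import hashlib


def measure(blob: bytes) -> bytes:
    """Measurement: SHA-256 digest of a component's code or configuration."""
    return hashlib.sha256(blob).digest()


def pcr_extend(pcr: bytes, digest: bytes) -> bytes:
    """TPM extend: PCR_new = SHA-256(PCR_old || digest)."""
    return hashlib.sha256(pcr + digest).digest()


def measured_launch(components: list[bytes]) -> bytes:
    """Fold an ordered launch chain into one PCR value, starting from all zeros."""
    pcr = bytes(32)
    for blob in components:
        pcr = pcr_extend(pcr, measure(blob))
    return pcr


good = measured_launch([b"SINIT ACM", b"hypervisor 1.2"])
tampered = measured_launch([b"SINIT ACM", b"hypervisor 1.2 + rootkit"])
print(good == tampered)                                             # False
print(good == measured_launch([b"SINIT ACM", b"hypervisor 1.2"]))  # True
```

A verifier that knows the expected value for a trusted chain can detect any change, including a reordering, because it produces a different final PCR.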

What is Execute Disable Bit?

Intel’s Execute Disable Bit (EDB) is a hardware-based technology that helps protect systems from viruses and malicious code.

Essentially, EDB enables the CPU to mark which areas of memory can and cannot execute code, which prevents worms and viruses from running code they have injected into data areas, such as the stack during a buffer overflow attack.
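In Intel 64 paging, this label is a single bit in each page-table entry: bit 63 (XD), honored when the IA32_EFER.NXE enable bit is set. The minimal sketch below checks a leaf entry only; real hardware also combines permissions from every paging level, and a non-present page causes a page fault rather than a simple refusal.

```python
XD_BIT = 63        # bit 63 of a 64-bit page-table entry: execute disable
PRESENT = 1 << 0   # bit 0: page is present
WRITABLE = 1 << 1  # bit 1: page is writable


def can_execute(pte: int, nxe_enabled: bool = True) -> bool:
    """Would an instruction fetch from the page described by this leaf entry be allowed?"""
    if not pte & PRESENT:
        return False
    return not (nxe_enabled and (pte >> XD_BIT) & 1)


stack_page = PRESENT | WRITABLE | (1 << XD_BIT)  # data/stack: writable, never executable
code_page = PRESENT                              # code: read-only, executable
print(can_execute(stack_page), can_execute(code_page))  # False True
```

Injected bytes on a stack page marked this way can still be written by an overflow, but the processor refuses to fetch and run them.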

EDB is an important technology for customers looking to decrease their system’s vulnerability to viruses at the hardware level and can even be coupled with additional anti-virus software for added protection.

Viruses and other malicious computer programs or software can cause significant downtime, data breaches, data loss and other disastrous consequences for military, industrial and commercial operations, where access to consistent, protected data is necessary.

What is Intel Run Sure Technology?

Xeon Scalable processors can make use of Intel’s Run Sure Technology (RST), which provides additional reliability, availability and serviceability (RAS) features and memory error correction that help increase server uptime and protect critical data.

According to Intel, through its Resilient System and Resilient Memory technologies, Intel RST combines a server’s processor, firmware and software resources to help “diagnose fatal errors, contain faults and automatically recover” and ensure data integrity within the memory subsystem. As a result, servers remain up and running for a longer period, which minimizes service and maintenance expenses associated with downtime.

Intel lists four key applications that would benefit from RST:

- Business processing

- In-memory

- Data analysis or mining

- Data warehousing or data mart

For more information on RST, visit Intel’s RST website page.

What is Mode-Based Execution Control?

Intel’s Mode-Based Execution Control (MBE), unique to the Xeon Scalable processor family, is a security enhancement of Extended Page Tables that functions as an additional safeguard against malware attacks.

According to Intel, MBE “provides an extra layer of protection from malware attacks in a virtualized environment by enabling hypervisors to more reliably verify and enforce the integrity of kernel-level code.”

Intel’s Xeon Processor Scalable Family Technical Overview says that MBE provides additional refinement within the Extended Page Tables (EPT) by turning the Execute Enable (X) permission bit into two options:

- XU for user pages

- XS for supervisor pages
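Per our reading of the Intel SDM, when mode-based execute control is enabled, EPT entry bit 2 becomes the execute permission for supervisor-mode linear addresses (XS) and bit 10 becomes the execute permission for user-mode linear addresses (XU); whether an address is user- or supervisor-mode comes from the guest's own page tables. The toy check below shows how a hypervisor can let verified kernel code run in supervisor mode while refusing to run user-writable pages as kernel code. The bit positions are our reading of the spec, and the helper is illustrative.

```python
READ, WRITE = 1 << 0, 1 << 1
EXEC_SUPERVISOR = 1 << 2  # XS with MBE on; the single X bit with MBE off
EXEC_USER = 1 << 10       # XU with MBE on


def ept_allows_fetch(entry: int, user_page: bool, mbe_enabled: bool) -> bool:
    """Does this EPT entry permit an instruction fetch by the guest?

    user_page: the guest's page tables mark the linear address as user-mode.
    """
    if not mbe_enabled:
        return bool(entry & EXEC_SUPERVISOR)
    return bool(entry & (EXEC_USER if user_page else EXEC_SUPERVISOR))


kernel_code = READ | EXEC_SUPERVISOR   # verified kernel text: XS only
user_data = READ | WRITE | EXEC_USER   # user page: XU only
print(ept_allows_fetch(kernel_code, user_page=False, mbe_enabled=True))  # True
print(ept_allows_fetch(user_data, user_page=False, mbe_enabled=True))    # False
```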

More information on MBE and the Xeon processor families supporting it can be found in Intel’s Xeon Scalable Platform Product Brief.

Photo: Trenton Systems' dual Xeon Motherboard, which utilizes Silver & Gold Series Scalable processors and the numerous advanced technologies listed in this blog post. Learn more about our Xeon Scalable processor boards and motherboards here.

Conclusion

We hope this overview of Intel’s advanced technologies has given you more insight into how each of them can benefit your program or application.


Our rugged servers can support each of Intel's advanced technologies.

After all, Trenton Systems allows customers to choose from an extensive line of Xeon Scalable processors, which incorporate the very advanced technologies listed in this blog post.

In conjunction with strict military and industrial testing standards, made-in-USA components, and a nearly 15-year-long life cycle, a Trenton Systems rugged server is a hardened, cybersecure, high-quality force to be reckoned with.

Let us know how we can help you.
