
Virtualization vs. Containerization: What's the Difference?

by Christopher Trick on Mar 24, 2023 2:16:53 PM

As systems across the commercial, military, and critical infrastructure sectors become increasingly software-based, virtualization and containerization will play a key role in their implementation.

In this blog, you will learn more about the differences between virtualization and containerization, pros and cons of each, and how they work together.

What is virtualization, and how does it work?

Virtualization is a technology that enables the creation of virtual versions of physical resources--such as computer systems, servers, storage devices, and network resources--called virtual machines (VMs).

The aim of virtualization is to provide a layer of abstraction between the physical hardware and the software that interacts with it. This abstraction enables the creation of multiple virtual resources that run independently and in isolation from each other, even on the same physical hardware.

Virtualization works by using a software layer called a hypervisor, which acts as an intermediary between the physical hardware and the virtual resources. A hypervisor either runs directly on the hardware (Type 1, or bare-metal) or on top of a host operating system (Type 2, or hosted). The hypervisor creates the virtual machines and manages resource allocation to each virtual machine depending upon application needs.

These virtual machines contain virtual versions of the underlying physical hardware components, such as CPU, memory, storage, and network interfaces. Each virtual machine has its own operating system, called the guest operating system.

Applications running on a virtual machine can interact with these virtual hardware components, but they are isolated from the underlying physical hardware and other virtual resources.
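To make the hypervisor's role more concrete, here is a minimal Python sketch of the bookkeeping described above. It is a toy model, not how any real hypervisor is implemented: the `Hypervisor` and `VirtualMachine` classes, their fields, and the capacity numbers are all assumptions made for illustration, and the sketch refuses requests beyond physical capacity even though real hypervisors often allow overcommitment.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualMachine:
    """Hypothetical record of the virtual hardware handed to one guest."""
    name: str
    guest_os: str
    vcpus: int
    memory_gb: int


@dataclass
class Hypervisor:
    """Toy model: tracks physical capacity and what has been given to VMs."""
    total_cpus: int
    total_memory_gb: int
    vms: list = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.total_cpus - sum(vm.vcpus for vm in self.vms)

    def free_memory_gb(self) -> int:
        return self.total_memory_gb - sum(vm.memory_gb for vm in self.vms)

    def create_vm(self, name: str, guest_os: str, vcpus: int, memory_gb: int) -> VirtualMachine:
        # Assumption: no overcommit, so a request beyond free capacity fails.
        if vcpus > self.free_cpus() or memory_gb > self.free_memory_gb():
            raise ValueError(f"not enough capacity for {name}")
        vm = VirtualMachine(name, guest_os, vcpus, memory_gb)
        self.vms.append(vm)
        return vm


# One physical machine hosting two guests with different operating systems.
host = Hypervisor(total_cpus=16, total_memory_gb=64)
host.create_vm("web", "Linux", vcpus=4, memory_gb=16)
host.create_vm("legacy-app", "Windows", vcpus=8, memory_gb=32)
print(host.free_cpus(), host.free_memory_gb())  # prints: 4 16
```

Each guest sees only the virtual CPUs and memory it was given, which mirrors the isolation between virtual machines described above.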

What are the pros and cons of virtualization?

Here are the pros of virtualization:

- Cost savings and compute density: Virtualization can help reduce hardware costs and enhance compute density, as multiple virtual machines can run on a single physical machine.

- Improved resource allocation: Virtualization allows for better resource allocation and management, enabling administrators to allocate resources on demand and ensure that applications have the resources they need to run optimally. A hypervisor allocates specific amounts of CPU, memory, and storage to each virtual machine.

- Portability: Virtualization enables virtual machines to be easily moved from one physical machine to another and run in a variety of environments.

- Security: Virtualization can help increase security by isolating applications and data, reducing the risk of data breaches. If virtual machines are isolated and not connected, an attack on one virtual machine should not spread to another.

- Preservation of legacy hardware: Say a critical application can only run on hardware that is no longer available. Virtualization allows users to create a virtual machine that replicates the old hardware and runs the application.

- Multiple operating systems can be run on the same server: After virtual machines are created, users can install a different operating system on each one. This means that different applications requiring different operating systems can run on each virtual machine.

- Easy maintenance: A virtual machine can be taken offline for maintenance without affecting the rest of the system, which stays in operation.

- Redundancy: Copies of the same virtual machine can be made and deployed in case of malfunction, ensuring continuous operation and reducing downtime.

Here are the cons of virtualization:

- Performance overhead: Virtualization can introduce performance overhead, as virtual machines share the physical machine's resources and the hypervisor adds a layer between applications and hardware. Each virtual machine must also boot a full guest operating system, which increases startup time and resource consumption.

- Complexity: Virtualization can add complexity to the IT environment, requiring additional management tools and processes.

- Security risks: Virtualization can introduce new security risks, such as the possibility of data breaches or unauthorized access to virtual machines. If virtual machines are connected, an attack on a single virtual machine can spread to others. Additionally, if a hacker accesses the host system, then all virtual machines can be compromised.

- Potentially increased hardware requirements: Depending on the number of virtual machines, virtualization can also increase hardware requirements, and therefore costs, as more processing power and memory may be needed to support multiple virtual machines on a single physical machine.


What is containerization, and how does it work?

Containerization is a technology for packaging and deploying software applications. It allows developers to package an application and its dependencies into units called containers, which can be run consistently on any system that has a container runtime installed and a compatible base operating system.

Containerization virtualizes a host operating system and shares the operating system's kernel--the core component of an operating system. This means that containers can run isolated from the host system and from each other, while sharing the host's kernel and only requiring the use of one operating system.

The containerization process also involves creating an image of the application and its dependencies, and then packaging this image into a container. The image is created by defining the application's environment, dependencies, and configuration in a file called a Dockerfile, which specifies how the container should be built.

Once the Dockerfile is created, the image can be built using a containerization platform like Docker, which creates a read-only template that can be used to create multiple containers.
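As a rough illustration of what such a file contains, the Python sketch below renders the text of a simple Dockerfile. The `render_dockerfile` helper, the `python:3.12-slim` base image, the `flask` package, and the `app.py` entry point are assumptions chosen for the example; only the `FROM`, `WORKDIR`, `COPY`, `RUN`, and `CMD` instructions themselves are standard Dockerfile syntax.

```python
import json


def render_dockerfile(base_image: str, packages: list, entrypoint: list) -> str:
    """Return Dockerfile text for a simple application image (illustrative only)."""
    lines = [
        f"FROM {base_image}",  # base image the application builds on
        "WORKDIR /app",        # working directory inside the image
        "COPY . /app",         # copy the application source into the image
    ]
    if packages:
        # Install the application's dependencies at build time.
        lines.append("RUN pip install --no-cache-dir " + " ".join(packages))
    # Exec-form CMD is written as a JSON array of arguments.
    lines.append("CMD " + json.dumps(entrypoint))
    return "\n".join(lines) + "\n"


text = render_dockerfile("python:3.12-slim", ["flask"], ["python", "app.py"])
print(text)
```

Saved as `Dockerfile` next to the application source, text like this could then be built into an image with `docker build`, and that one image could be used to start any number of containers.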

Containers can run on a piece of hardware by themselves or inside virtual machines.

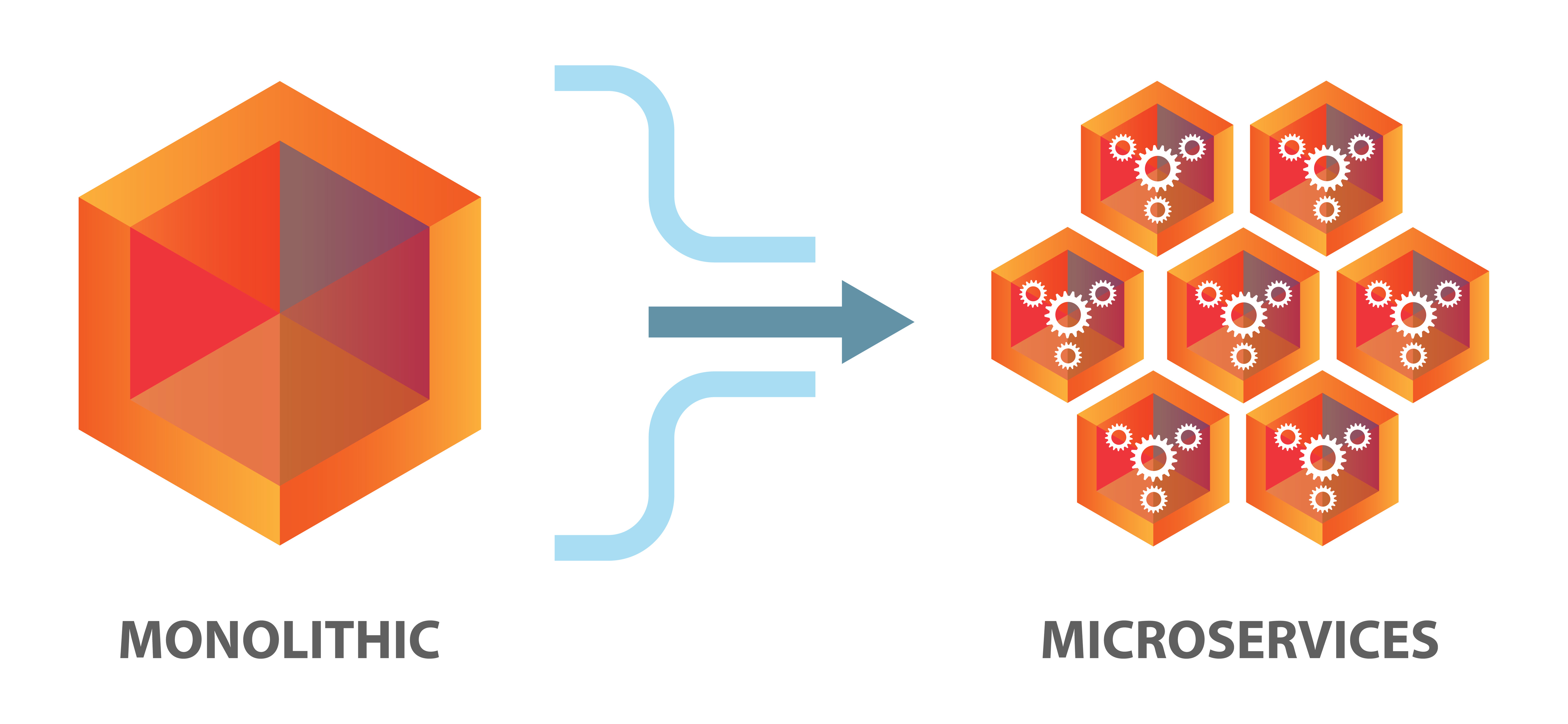

Containers often host microservices, which are individual functions of an application. So instead of a single monolithic application, an application can be spread out over multiple containers depending upon how many functions it has.

Microservices can also run on a piece of hardware or virtual machine as uncontainerized portions of an application, though that can lead to complications if the appropriate libraries and dependencies are not installed along with them.

A container engine such as Docker runs on a host operating system and provides the necessary infrastructure to create and run the containers, including isolated networks, storage, and resources like CPU and memory. A container orchestration tool--Kubernetes being the most popular one--coordinates containers across multiple hosts.

This means that containers can run isolated from the host system and from each other, while sharing the host's kernel.

It is important to note, however, that unlike virtual machines, containers run natively only on hosts whose operating system kernel matches the one the container was built for.

For example, an application containerized on one Linux distribution can run on a host with a different Linux distribution, as both share the Linux kernel. The application would not, however, be able to run natively on a Windows host; it would need a Linux virtual machine underneath.
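The compatibility rule above can be sketched as a simple comparison between the operating system a container image targets and the operating system of the host. The `runs_natively` function is a hypothetical helper written for this post; it deliberately ignores CPU architecture, kernel versions, and tools that hide a virtual machine behind the scenes.

```python
import platform
from typing import Optional


def runs_natively(image_os: str, host_os: Optional[str] = None) -> bool:
    """True if the image's target OS matches the host's OS (simplified check)."""
    # platform.system() reports names such as "Linux", "Windows", or "Darwin".
    host = (host_os or platform.system()).lower()
    return image_os.lower() == host


print(runs_natively("linux", "Linux"))    # prints: True
print(runs_natively("linux", "Windows"))  # prints: False
```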

What are the pros and cons of containerization?

Here are the pros of containerization:

- Portability: Containers can run on any infrastructure that provides a container runtime and a compatible operating system, making it easier to move applications between development, testing, and production environments.

- Isolation: Containers provide a level of isolation between applications as well as application functions, making it easier to manage dependencies and avoid conflicts between different applications and functions.

- Scalability: Containers can be easily scaled up or down to meet changing demand, and they make it easier to update evolving applications. Since the microservices comprising the application are spread out over different containers, updating a single feature does not require replacing the entire application, and updates can often be made in seconds.

- Preservation of legacy software: Say a critical application can only run on a system that is no longer available. Containerization packages the application and its dependencies into a unit that can run on any infrastructure with a compatible operating system and container runtime.

- Consistency: Containers ensure that applications run consistently across different environments, reducing the risk of configuration drift and making it easier to manage application deployments.

- Resource Efficiency: Containers are lightweight and require fewer resources compared to virtual machines, sharing only the operating system's kernel and using less memory, CPU, and storage. This means that even more containers than virtual machines can run on a single server, and containers take much less time to start up than virtual machines, too.

- Size: Container images are typically much smaller than virtual machine images, as they do not include a full guest operating system. This means that users potentially need less hardware to run the same workloads, further reducing costs.

- Easy maintenance: A container can be taken offline for maintenance without affecting the rest of the system, which stays in operation.

- Redundancy: Copies of the same container can be made and deployed in case of malfunction, ensuring continuous operation and reducing downtime.

Here are the cons of containerization:

- Security: Containers share the host operating system's kernel and can potentially access host system resources, which could lead to security vulnerabilities if not properly managed. A hacker could access a host operating system, compromising all containers. Additionally, if all containers are connected, an attack on one container can compromise all containers.

- Management overhead: Containers are typically deployed and managed at scale, which can create management overhead as the number of containers grows.

- Persistent data storage: Containers are designed to be stateless and ephemeral, which means they do not typically include any persistent storage. This can be a challenge when trying to store and manage data in a containerized environment.

- Weaker default resource allocation: Since containers share the host's kernel, a container can by default consume as much CPU and memory as the host allows, which can starve other containers. However, tools such as Docker and Kubernetes can set CPU and memory limits on a per-container basis, enabling users to allocate resources to individual containers based on application needs.
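As an illustration of per-container resource allocation with Docker, the Python sketch below assembles a `docker run` command that caps a container's memory and CPU. The `docker_run_command` helper, the image name `example-app:latest`, the container name, and the limit values are assumptions for the example; `--detach`, `--name`, `--memory`, and `--cpus` are existing `docker run` options. The sketch only builds and prints the command, so it does not require Docker to be installed.

```python
import shlex


def docker_run_command(image: str, name: str, memory: str, cpus: float) -> list:
    """Build (but do not execute) a docker run command with resource limits."""
    return [
        "docker", "run", "--detach",
        "--name", name,
        "--memory", memory,   # upper bound on memory, e.g. "512m"
        "--cpus", str(cpus),  # upper bound on CPU, e.g. 1.5 cores
        image,
    ]


cmd = docker_run_command("example-app:latest", "web", memory="512m", cpus=1.5)
print(shlex.join(cmd))
# prints: docker run --detach --name web --memory 512m --cpus 1.5 example-app:latest
```

Passing the list to `subprocess.run` would start the container on a machine with Docker available; Kubernetes expresses the same idea through resource requests and limits in a pod specification.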


How do virtualization and containerization work together?

When virtualization and containerization are used together, the virtual machines can host multiple containers that run different applications or services, provided a container runtime is installed on the guest operating system of the virtual machine.

This allows for better resource utilization, as multiple containers can share the resources of a single virtual machine, and improved flexibility, as containers can be easily moved between virtual machines or even across physical hosts.

Virtualization and containerization also enable multiple isolated environments to run on the same physical hardware, which is useful for security and compliance requirements.

By using both technologies together, organizations can increase their ability to manage and scale their applications and infrastructure, providing a more flexible and efficient computing environment.

Other benefits of running containers inside virtual machines include:

- Improved isolation: Running containers inside virtual machines provides an extra layer of isolation and security. The container is isolated from the host operating system, and the virtual machine provides an additional layer of isolation, which can be helpful in environments with strict security requirements.

- Compatibility: Running containers inside virtual machines can help overcome compatibility issues between the container and the host operating system. Some containerized applications may require specific dependencies or configurations that are not compatible with the host operating system. By running the container inside a virtual machine with the required dependencies and configurations, including the appropriate operating system, compatibility issues can be avoided.

- Resource management: Running containers inside virtual machines can help with resource management. Virtual machines allow for granular control over resource allocation, such as CPU, memory, and storage. This can help prevent resource contention between containers and other applications running on the host operating system.

- Flexibility: Running containers inside virtual machines provides additional flexibility for application deployment. This is particularly true in hybrid cloud environments, where workloads may need to be moved between different cloud providers or on-premise data centers. By packaging containers inside virtual machines, workloads can be moved more easily between different environments without requiring significant changes to the underlying infrastructure.

Overall, running containers inside virtual machines provides an additional layer of isolation and flexibility, which can be beneficial in certain environments. However, it does add some additional complexity and overhead, so it may not be the best approach for all scenarios.
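To show how containers might be distributed across virtual machines, here is a small Python sketch that assigns each container to the first virtual machine with enough free memory. The first-fit strategy, the `place_containers` function, and all names and sizes are assumptions for illustration; real orchestration tools weigh many more factors than memory.

```python
def place_containers(vm_memory_mb: dict, container_memory_mb: dict) -> dict:
    """First-fit: put each container on the first VM with enough free memory."""
    free = dict(vm_memory_mb)  # copy so the caller's capacities are unchanged
    placement = {}
    for container, needed in container_memory_mb.items():
        for vm, available in free.items():
            if needed <= available:
                placement[container] = vm
                free[vm] = available - needed
                break
        else:
            # No VM had room, so the workload needs another virtual machine.
            raise ValueError(f"no VM has room for {container}")
    return placement


vms = {"vm-a": 2048, "vm-b": 4096}
containers = {"api": 1024, "db": 2048, "cache": 512}
print(place_containers(vms, containers))
# prints: {'api': 'vm-a', 'db': 'vm-b', 'cache': 'vm-a'}
```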

How does Trenton Systems come into play?

At Trenton, our COTS, SWaP-C-optimized high-performance computers are designed with our customers to ensure they receive a solution that best fits their application needs, including virtualization and containerization.

With modularity at the hardware and software level, our end-to-end solutions deliver an enhanced out-of-box experience, maximum scalability, and hardware-based protection of critical workloads at the tactical edge.

Our systems' virtualization and containerization capabilities are further enhanced by PCIe 5.0, CXL, and 4th Gen Intel® Xeon® Scalable Processors to provide the speeds and feeds required for a variety of applications across the modern, multi-domain battlespace.

Additionally, we ensure that we incorporate components free of vulnerabilities from hostile nations, and we protect our systems from the most sophisticated of cyberattacks with a tight grip on our supply chain and multi-layer cybersecurity.

Interested in learning more about our virtualization and containerization capabilities? Just reach out to us anytime here.

Team Trenton is at your service. 😎
