
What Is Edge Computing?

by Brett Daniel on Apr 30, 2020 3:49:42 PM

Photo: Edge computing is a major player in the Fourth Industrial Revolution, or Industry 4.0, placing computation at or near the data source to reduce latency and provide real-time data processing and insights to enterprises.

Table of Contents

- What is edge computing?

- What is an edge server?

- What are some edge computing examples?

- What are the benefits of edge computing?

- Edge vs. cloud computing: Is the edge replacing the cloud?

Edge Computing Scenario

Imagine that a driver of a military vehicle needs to travel to a nearby outpost to respond to an unexpected attack.

The driver doesn’t know exactly where the outpost is located. He just knows it’s about 15 miles from the military base where he's currently stationed.

Thankfully, the driver’s vehicle is equipped with an Internet of Things (IoT) virtual assistant that provides him with real-time navigation, geographical information and even weather-related updates.

This nifty assistant transmits the driver's requests for information to a distant server at a centralized cloud data center located thousands of miles away.

In turn, the cloud server uses this device-generated data to compute the information the driver requested.

Photo: A data center with multiple rows of fully operational server racks

Normally, this data is relayed back to the virtual assistant almost instantaneously. The assistant then uses it to guide the driver to the nearby outpost, all the while highlighting any obstacles or conditions that may impede his travel or jeopardize his safety.

But there’s a problem this time.

The assistant is silent. Buffering.

Retrieving the requested information is taking longer than usual.

Why?

Because hundreds of other IoT devices at the base – wearables, security cameras, drones, weapons systems, smart speakers and smart appliances – are also transmitting data to the cloud using the same connection, resulting in a network slowdown of nightmarish proportions.

As a result, the driver is experiencing a delay, or latency, in his device’s response time.

And in turn, the driver cannot receive geographical information or directions to the besieged outpost.

At least, not in a timely manner.

So, what’s the solution?

Enter edge computing.

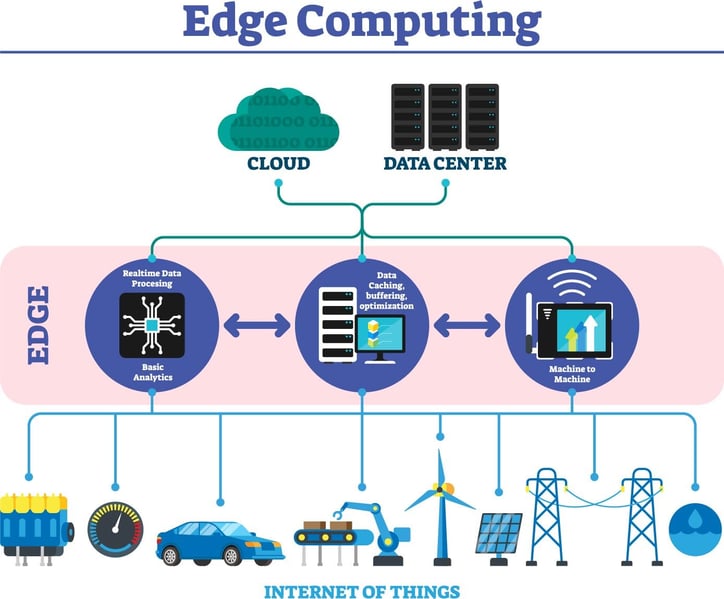

Infographic: An illustration of an edge computing architecture

What is edge computing?

Edge computing is a type of network architecture in which device-generated data is processed at or near the data source.

Edge computing is implemented through integrated technology installed on Internet of Things (IoT) devices or through localized edge servers, which may be located inside smaller cloud-based data centers, known as cloudlets or micro data centers.

You can think of edge computing as an expansion or complement of the cloud computing architecture. In cloud computing, data is processed or stored at data centers located hundreds of miles, or even continents, away from a given network.

Essentially, edge computing distributes part of a cloud server’s data processing workload to an integrated or localized computer that is proximal to the data-generating device, a process that mitigates latency issues caused by a substantial amount of data transfer to the cloud.

One example of these localized computers is an edge server.

Photo: Edge servers crunch data from IoT devices to give enterprises the insights they need to achieve their goals faster and more efficiently.

What is an edge server?

An edge server is a computer that’s located near data-generating devices in an edge computing architecture.

By processing data locally, it reduces latency in data transmission and filters out unimportant or irrelevant data before the rest is sent to the cloud for storage.

Edge servers are used as an intermediary between a network and a cloud data center, absorbing a portion of an IoT device’s data processing activities and delivering results in real time.
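To make the intermediary role more concrete, here is a minimal Python sketch of an edge server summarizing sensor readings locally and forwarding only the unusual ones. The `Reading` class, the `process_at_edge` function, the temperature values and the threshold are all hypothetical, chosen for illustration rather than taken from any particular product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    sensor_id: str
    value: float  # e.g. a temperature in degrees Celsius (illustrative)


def process_at_edge(readings, threshold):
    """Summarize all readings locally and pick the ones worth forwarding.

    Readings at or below `threshold` are treated as routine and only
    contribute to the local summary. Readings above it are kept in full
    so they can be sent on to the cloud.
    """
    to_forward = [r for r in readings if r.value > threshold]
    summary = {
        "count": len(readings),
        "mean": mean(r.value for r in readings) if readings else None,
        "forwarded": len(to_forward),
    }
    return summary, to_forward


# Hypothetical batch of readings arriving at the edge server.
readings = [
    Reading("s1", 21.0),
    Reading("s2", 22.0),
    Reading("s3", 95.0),  # anomalous reading
    Reading("s4", 20.0),
]
summary, to_forward = process_at_edge(readings, threshold=80.0)
print(summary)
print([r.sensor_id for r in to_forward])
```

In this sketch, only one of the four readings would leave the local network. A real edge server would apply whatever filtering rules suit its application.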

Examples of edge servers include rugged servers, which are used in the military, manufacturing, and other industries.

The computational offload achieved by the edge computing architecture, in conjunction with the resilience and processing power of a high-performance rugged server, can make for quite a powerful combination at the edge.

Photo: Soldier wearables are just one of many edge computing examples.

What are some edge computing examples?

There are several instances of edge computing architectures in military, commercial, and industrial applications today.

Examples of edge computing include various IoT sensors attached to soldier wearables and battlefield systems, systems on offshore oil rigs, modern cars and self-driving vehicles, security and assembly line cameras, virtual assistants, as well as the edge servers that take the data and measurements from these devices and crunch them to provide insights to their users.

We detail each of these examples below:

- Soldier wearables and battlefield systems: The power of edge computing allows for soldier and field data to be collected and processed in real time in what has been termed the “Internet of Military Things” (IoMT) or “Internet of Battlefield Things” (IoBT). This massive family of interconnected devices, ranging from helmets to suits to weapons systems and more, produces a gargantuan amount of contextualized data, including information about a soldier’s physical health and identification data about potential enemy combatants. When lives are on the line, the act of computation needs to take place sooner rather than later. Thanks to edge computing, soldiers can do their jobs in the field more safely and efficiently, resulting in improved national security down the line.

- Offshore oil rigs: Edge computing is a likely solution for remote locations with limited or no connectivity to a centralized data center. Offshore oil rigs are a perfect case in point. With more and more data being collected by Industrial Internet of Things (IIoT) devices, places like offshore oil rigs are generating more data than ever, oftentimes a lot more than their networks can handle. By extending computation closer to the data source using edge devices and servers, an offshore oil rig doesn’t have to worry about latency issues in real-time applications, or transmitting mountains of data across an already-spotty network. By utilizing localized edge computing solutions, an oil rig’s reliance on the computational abilities of a faraway data center is significantly reduced.

- Self-driving vehicles: Autonomous vehicles are a popular example of edge computing due to their need to process data with as little latency as possible. A self-driving vehicle cannot wait for a distant cloud data center to decide whether it should change lanes or brake for a pedestrian. These decisions need to happen almost instantaneously to ensure the safety and well-being of persons both on and off the road. An edge computing architecture allows for this sort of real-time decision-making, because the data is processed on the vehicle itself rather than sent over a network. This sort of integrated computation is also known as point-of-origin processing.

- Security and assembly line cameras: Surveillance cameras in security systems and at production and manufacturing facilities often record a continuous stream of footage, which is then sent to the cloud for processing or storage. To cut down on the amount of stored data, the cloud server may, for example, execute an application that deletes the useless footage and stores only the footage that meets certain criteria, such as a person in motion or a defect in a product. Regardless, this uses a lot of bandwidth, because every byte of that footage is transferred to the cloud. But if the cameras can perform video analytics themselves and send only the important bits of footage to the cloud, less data is transmitted across the network, resulting in less bandwidth use and less network traffic.

- Virtual assistants: Virtual assistants that use the cloud for data processing can improve response times by processing requests locally using an edge gateway or server, rather than relying on all the computation to take place at a faraway cloud data center. Our military driver would have received the information he requested a lot faster had his outpost network taken advantage of edge computing's real-time processing capabilities.
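The camera example above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: frames are modeled as short lists of grayscale pixel values, "motion" is approximated by the average pixel difference between consecutive frames, and the function names and threshold are hypothetical. Real video analytics are far more sophisticated.

```python
def mean_abs_diff(frame_a, frame_b):
    """Average per-pixel absolute difference between two equal-sized frames."""
    return sum(abs(a - b) for a, b in zip(frame_a, frame_b)) / len(frame_a)


def select_frames(frames, threshold):
    """Return the indices of frames that differ noticeably from the previous one."""
    keep = []
    for i in range(1, len(frames)):
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            keep.append(i)
    return keep


# Four tiny 4-pixel "frames"; only frame 2 shows a real change in the scene.
frames = [
    [10, 10, 10, 10],
    [10, 11, 10, 10],    # sensor noise only
    [10, 200, 200, 10],  # something moved into view
    [10, 200, 200, 10],  # scene unchanged again
]
kept = select_frames(frames, threshold=5.0)
sent_fraction = len(kept) / len(frames)
print(kept, sent_fraction)
```

Here the camera would upload one frame out of four instead of all of them, which is the bandwidth saving the example describes.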

What are the benefits of edge computing?

There are four main benefits of establishing an edge computing architecture: reduced bandwidth use, latency reduction, cost savings, and improved security and reliability, especially when supporting next-gen technology like 5G.

We detail each of these four benefits below:

- Reduced bandwidth use: Installing an edge device on or near the data source would allow most of the data to be stored and processed there. This cuts down on the amount of data being transferred to the cloud, which, in turn, reduces the amount of network bandwidth being used by IoT devices.

- Latency reduction: In an edge computing architecture, data from IoT devices doesn’t have to travel great distances to a cloud data center. This reduction in distance reduces processing delays and improves response times, which is ideal for any military, industrial or commercial application in which security, safety or manufacturing efficiency is critical.

- Cost savings: Higher bandwidth usage costs more money. In an edge computing architecture, less bandwidth is being used, which translates directly into dollars saved.

- Improved security and reliability: With edge computing, data is stored and processed at multiple locations close to the source, instead of at a cloud data center, where a single cybersecurity attack or maintenance operation could disrupt the network entirely or result in a widespread release of sensitive data. If data storage and processing are decentralized to edge devices, so, too, are the ramifications of security breaches and maintenance delays: a cyberattack or maintenance window would typically affect only one or two edge devices, instead of an entire data center.
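To put rough numbers on the latency benefit, the sketch below estimates the minimum round-trip propagation delay over fiber for a distant data center versus a nearby edge server. It assumes signals travel through fiber at roughly 200,000 km per second (about two-thirds of the speed of light in a vacuum), and the two distances are hypothetical. It ignores routing, queuing and processing time, so real-world delays would be higher.

```python
KM_PER_MILE = 1.609344
FIBER_SPEED_KM_PER_S = 200_000  # assumed: roughly two-thirds the speed of light in a vacuum


def round_trip_ms(distance_miles):
    """Minimum round-trip propagation delay over fiber, in milliseconds."""
    distance_km = distance_miles * KM_PER_MILE
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


cloud_ms = round_trip_ms(3000)  # hypothetical distant cloud data center
edge_ms = round_trip_ms(1)      # hypothetical edge server about a mile away
print(f"cloud: {cloud_ms:.1f} ms, edge: {edge_ms:.3f} ms")
```

Under these assumptions, the distant data center costs about 48 milliseconds per round trip before any processing happens, while the nearby edge server costs a small fraction of a millisecond.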

Consider the scenario from earlier, involving the military vehicle driver and the IoT assistant.

Because there were so many additional IoT devices transmitting data to the cloud, the base's network was temporarily overloaded, causing the driver to experience a delay in response time.

Not to mention, the data required to fulfill all those requests was being computed thousands of miles away at a cloud data center, instead of on an integrated sensor or chip, or on a server at the edge of the network.

These latency issues could have been mitigated if the data had been processed using an edge computing architecture.

Instead of running a hefty stream of data to the centralized data center for storage and computation, an edge computing architecture would have allowed the other IoT devices on the network to store and process a portion of the data locally.

In addition, the base would have cut down on its operational costs by using less bandwidth, as well as reduced the impact of potential security or maintenance-related issues.
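As a back-of-the-envelope illustration of the overloaded base network, the sketch below compares the load on a shared uplink with and without local processing. Every number here is hypothetical (300 devices, 0.5 Mbps each, a 100 Mbps uplink, 80 percent of data handled at the edge), and `uplink_utilization` is an illustrative helper, not a standard formula from any networking library.

```python
def uplink_utilization(num_devices, mbps_per_device, link_mbps, local_fraction=0.0):
    """Offered load on the shared uplink as a fraction of its capacity.

    `local_fraction` is the share of each device's data that is handled at
    the edge and therefore never sent over the uplink.
    """
    offered = num_devices * mbps_per_device * (1.0 - local_fraction)
    return offered / link_mbps


# Hypothetical base: 300 devices at 0.5 Mbps each sharing a 100 Mbps uplink.
cloud_only = uplink_utilization(300, 0.5, 100)
with_edge = uplink_utilization(300, 0.5, 100, local_fraction=0.8)
print(cloud_only, with_edge)
```

A value above 1.0 means the devices are trying to send more than the link can carry, which is when requests like the driver's start to queue. With most of the data handled locally, the same link runs at roughly 30 percent of capacity in this example.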

Edge vs. cloud computing: Is the edge replacing the cloud?

Edge computing is unlikely to replace cloud computing entirely.

Applications and devices that don’t require real-time data processing or analysis are likely to still use the cloud for storage and processing.

As the number of IoT devices increases, so, too, will the amount of data that needs to be stored and processed.

If businesses and organizations don’t switch to an edge computing architecture, their chances of experiencing latency in applications requiring real-time computation will increase as the number of IoT devices using their networks increases. In addition, they’ll spend more money on the bandwidth necessary to transfer such data.

Edge computing is an extension, rather than a replacement, of the cloud. And as more and more devices begin to use cloud data centers as a processing resource, it’s clear that edge computing is the future, at least if you want your program or application to function seamlessly, efficiently and affordably.

For more information about acquiring edge computing solutions for your program or application, reach out to Trenton Systems. Our engineers are on standby.
